Abstract

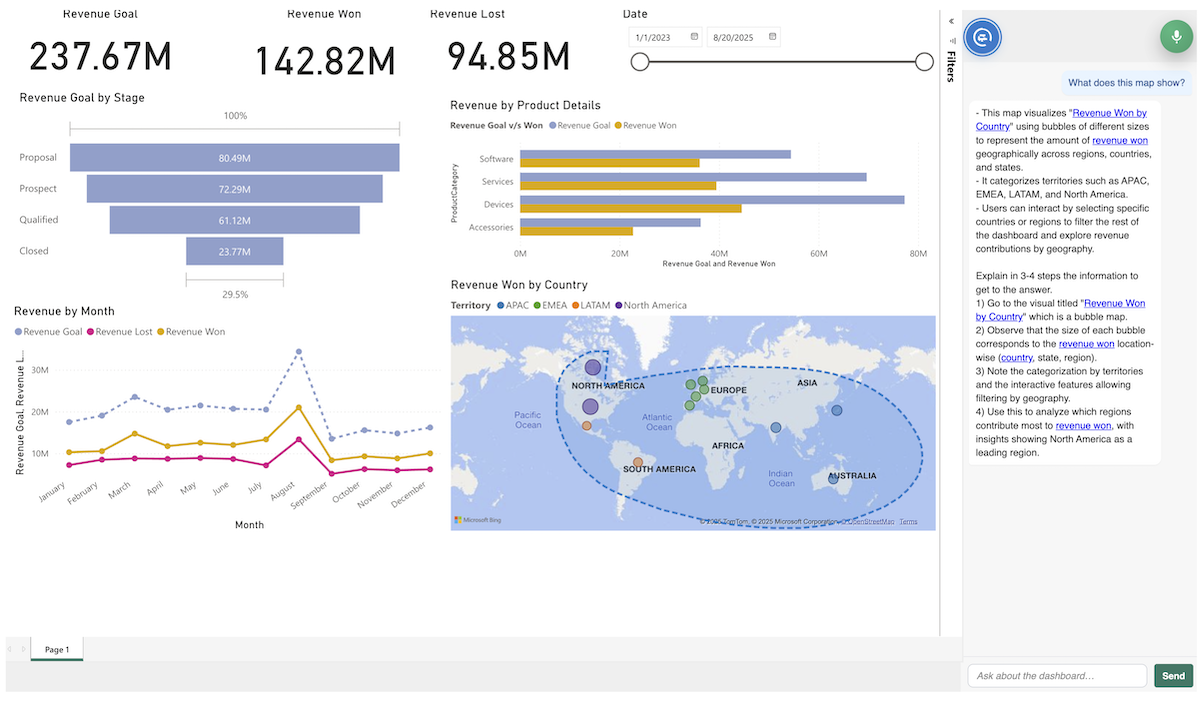

Visualization dashboards are regularly used for data exploration and analysis, but their complex interactions and interlinked views often require time-consuming onboarding sessions from dashboard authors. Preparing these onboarding materials is labor-intensive and requires manual updates when dashboards change. Recent advances in multimodal interaction powered by large language models (LLMs) provide ways to support self-guided onboarding. We present DIANA (Dashboard Interactive Assistant for Navigation and Analysis), a multimodal dashboard assistant that helps users with navigation and guided analysis through chat, audio, and mouse-based interactions. Users can choose any interaction modality, or a combination of them, to onboard themselves on the dashboard. Each modality highlights relevant dashboard features to support user orientation. Unlike typical LLM systems that rely solely on text-based chat, DIANA combines multiple modalities to provide explanations directly in the dashboard interface. We conducted a qualitative user study to understand the use of different modalities for different types of onboarding tasks and their complexities.

Citation

Vaishali Dhanoa, Gabriela Molina León, Eve Hoggan, Eduard Gröller, Marc Streit, Niklas Elmqvist

Hey Dashboard!: Supporting Voice, Text, and Pointing Modalities in Dashboard Onboarding

arXiv, doi:10.48550/arXiv.2510.12386, 2025.

BibTeX

@preprint{2025_hey_dashboard,
  title = {Hey Dashboard!: Supporting Voice, Text, and Pointing Modalities in Dashboard Onboarding},
  author = {Vaishali Dhanoa and Gabriela Molina León and Eve Hoggan and Eduard Gröller and Marc Streit and Niklas Elmqvist},
  journal = {arXiv},
  doi = {10.48550/arXiv.2510.12386},
  year = {2025}
}