Abstract

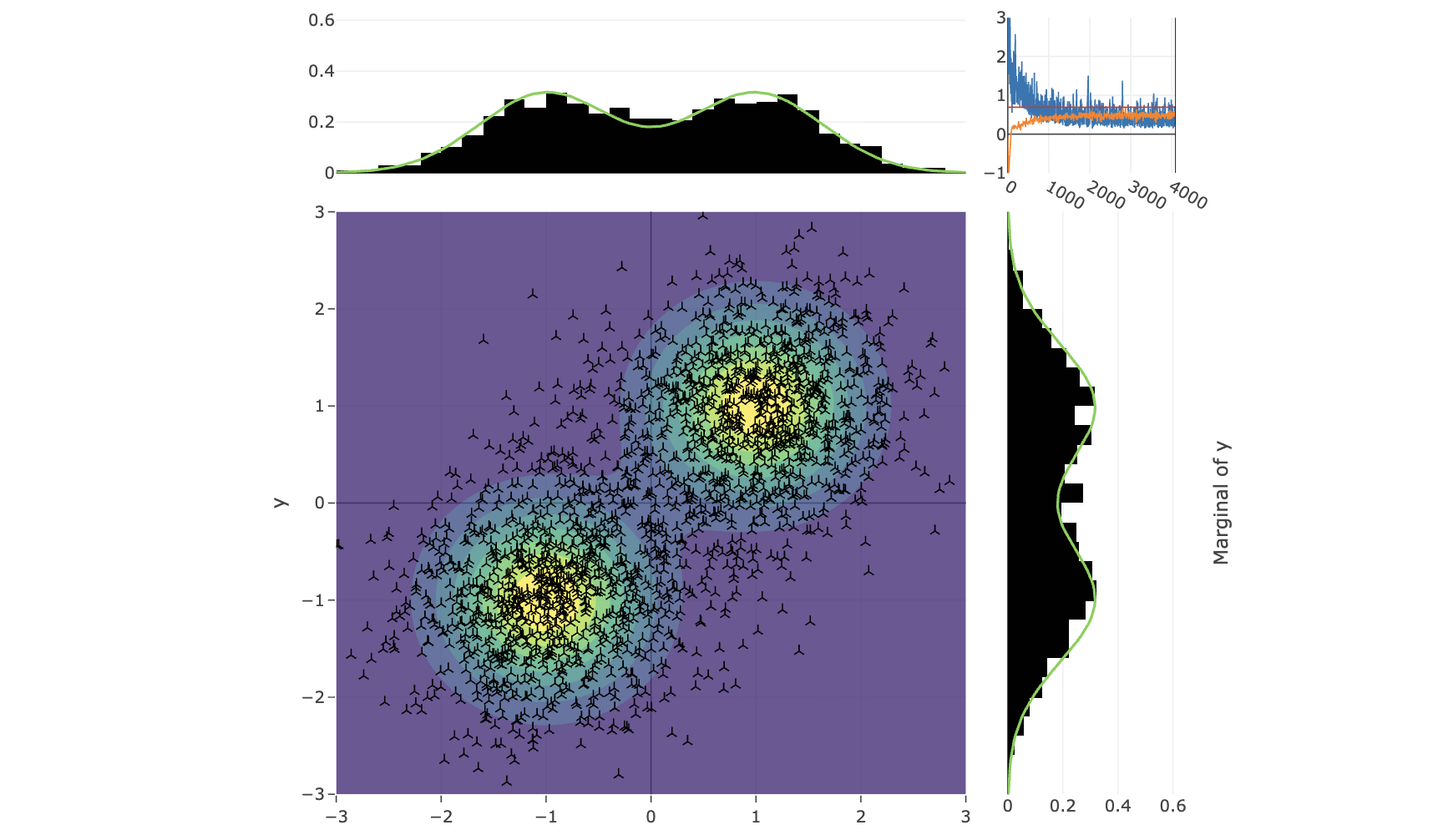

Imagine you want to discover new molecules for a life-saving drug. With an estimated size of 10^60, the space of possible molecular structures is vast, and promising candidates are sparse and difficult to find. Traditional methods might guide you to a single best guess, but what if this guess is toxic, has side effects, or fails in a later stage of testing? What if you need many diverse, high-quality candidates to test? This is where Generative Flow Networks (GFlowNets) come in. They are a class of generative models that do not aim for a single optimal solution. Instead, they aim to sample diverse candidates from a space of possibilities, with a preference for high-reward outcomes. In this article, we introduce the core concepts behind GFlowNets, outline their theoretical foundations and common training pitfalls, and guide readers toward an intuitive understanding of how they work. We provide an interactive Playground, where reward functions and hyperparameters can be adjusted on the fly to reveal a GFlowNet’s learning dynamics. A Tetris example brings these ideas to life, as the network uncovers stacking strategies in real time. By the end, readers will have both a practical grasp of GFlowNet behavior and inspiration for applying GFlowNets to their own challenges.

Citation

Florian Holeczek, Alexander Hillisch, Andreas Hinterreiter, Alex Hernández-García, Marc Streit, Christina Humer

GFlowNet Playground - Theory and Examples for an Intuitive Understanding

Workshop on Visualization for AI Explainability, 2025.

BibTeX

@article{,
  title = {GFlowNet Playground - Theory and Examples for an Intuitive Understanding},
  author = {Florian Holeczek and Alexander Hillisch and Andreas Hinterreiter and Alex Hernández-García and Marc Streit and Christina Humer},
  journal = {Workshop on Visualization for AI Explainability},
  year = {2025}
}

Acknowledgements

The work was partly funded by the Austrian Science Fund under grant number FWF DFH 23–N. Some implementations and ideas are based on the great work of others. The continuous line example by Joseph Viviano & Kolya Malkin: the idea for the playground environment is based on their notebook, and much of the training code is adapted from their tutorial. The neural network playground by Daniel Smilkov and Shan Carter was an inspiration for how to visualize machine learning and training progress in the browser. The code for the flow field visualization is adapted from Mathcurious’ implementation.