Abstract

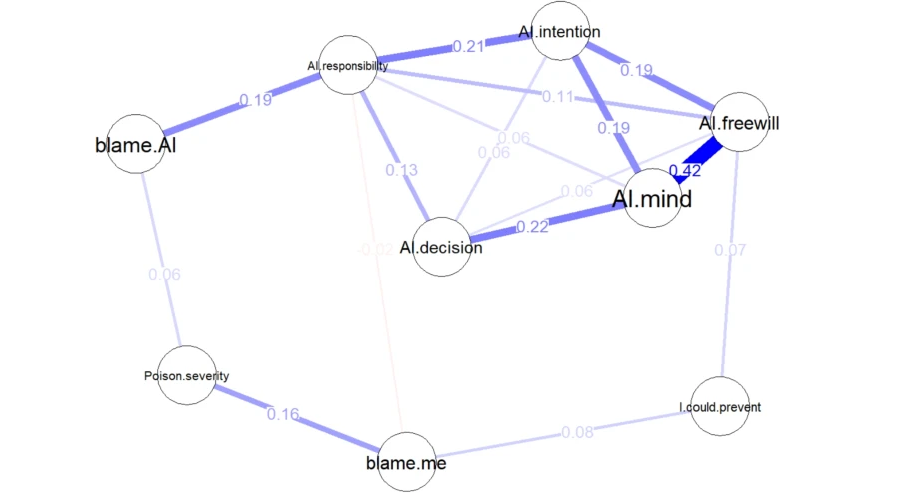

The increasing involvement of Artificial Intelligence (AI) in moral decision situations raises the possibility of users attributing blame to AI-based systems for negative outcomes. In two experimental studies with a total of N = 911 participants, we explored the attribution of blame and underlying moral reasoning. Participants had to classify mushrooms in pictures as edible or poisonous with support of an AI-based app. Afterwards, participants read a fictitious scenario in which a misclassification due to an erroneous AI recommendation led to the poisoning of a person. In the first study, increased system transparency through explainable AI techniques reduced blaming of AI. A follow-up study showed that attribution of blame to each actor in the scenario depends on their perceived obligation and capacity to prevent such an event. Thus, blaming AI is indirectly associated with mind attribution and blaming oneself is associated with the capability to recognize a wrong classification. We discuss implications for future research on moral cognition in the context of human–AI interaction.

Citation

Benedikt Leichtmann, Andreas Hinterreiter, Christina Humer, Alfio Ventura, Marc Streit, Martina Mara

Moral reasoning in a digital age: Blaming artificial intelligence for incorrect high-risk decisions

Current Psychology, 43: 32412-32421, doi:10.1007/s12144-024-06658-2, 2024.

BibTeX

@article{,
  title = {Moral reasoning in a digital age: Blaming artificial intelligence for incorrect high-risk decisions},
  author = {Benedikt Leichtmann and Andreas Hinterreiter and Christina Humer and Alfio Ventura and Marc Streit and Martina Mara},
  journal = {Current Psychology},
  publisher = {Springer},
  doi = {10.1007/s12144-024-06658-2},
  url = {https://doi.org/10.1007/s12144-024-06658-2},
  volume = {43},
  pages = {32412-32421},
  month = {November},
  year = {2024}
}

Acknowledgements

Open access funding provided by Johannes Kepler University Linz. This work was funded by Johannes Kepler University Linz, Linz Institute of Technology (LIT), the State of Upper Austria, and the Federal Ministry of Education, Science and Research under grant number LIT-2019-7-SEE-117, awarded to MM and MS, the Austrian Science Fund under grant number FWF DFH 23-N, and under the Human-Interpretable Machine Learning project (funded by the State of Upper Austria).