Abstract

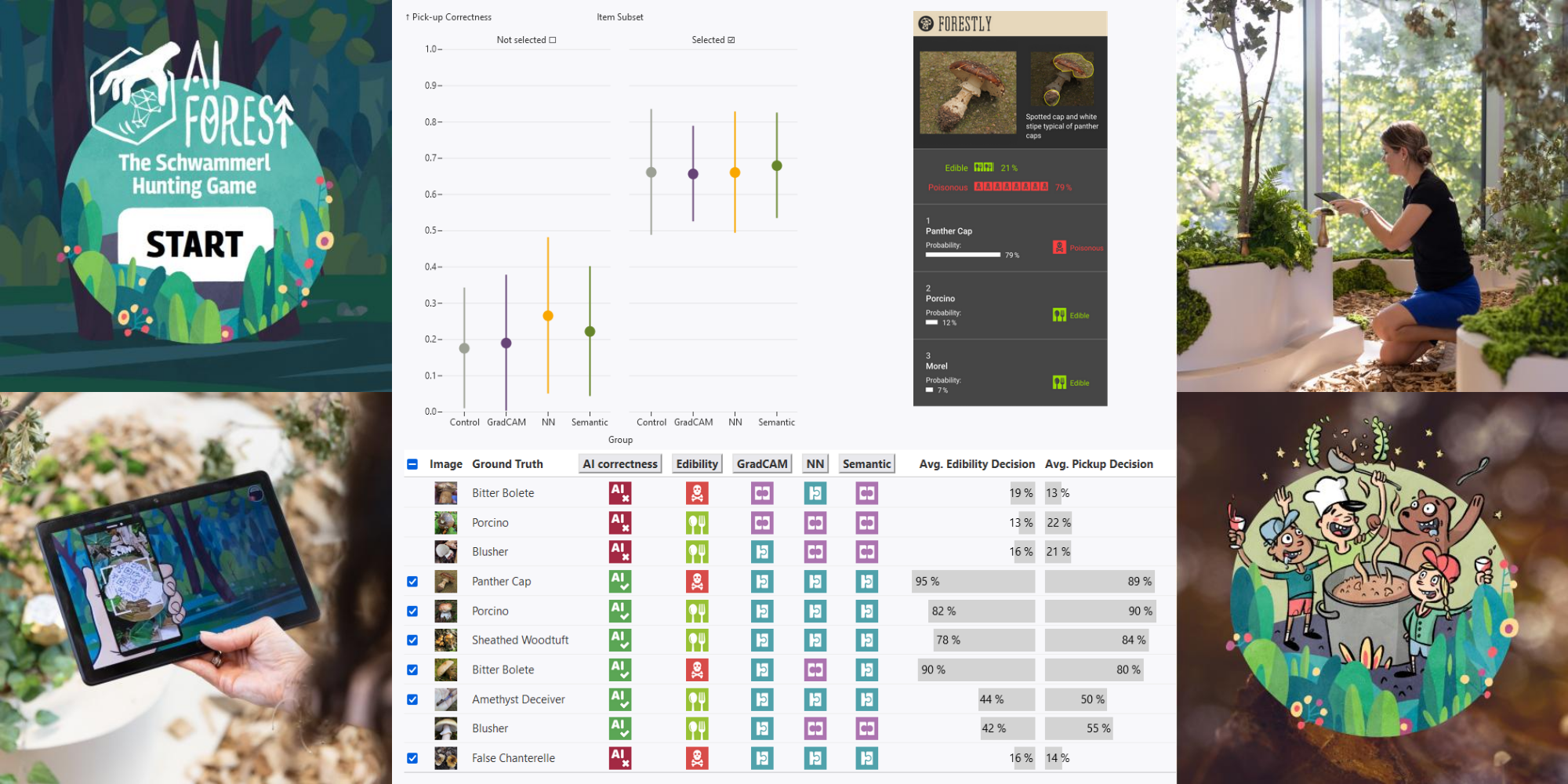

Does explainability change how users interact with an artificially intelligent agent? We sought to answer this question in a transdisciplinary research project with a team of computer scientists and psychologists. We chose the high-risk decision-making task of AI-assisted mushroom hunting to study the effects that explanations of AI predictions have on user trust. We present an overview of three studies, one of which was carried out in an unusual environment as part of a science and art festival. Our results show that visual explanations can lead to more adequate trust in AI systems and thereby to improved decision correctness.

Citation

Andreas Hinterreiter, Christina Humer, Benedikt Leichtmann, Martina Mara, Marc Streit

Of Deadly Skullcaps and Amethyst Deceivers: Reflections on a Transdisciplinary Study on XAI and Trust

6th Workshop on Visualization for AI Explainability, 2023.

BibTeX

@article{,

title = {Of Deadly Skullcaps and Amethyst Deceivers: Reflections on a Transdisciplinary Study on XAI and Trust},

author = {Andreas Hinterreiter and Christina Humer and Benedikt Leichtmann and Martina Mara and Marc Streit},

journal = {6th Workshop on Visualization for AI Explainability},

url = {https://jku-vds-lab.at/hoxai-at-visxai},

month = {October},

year = {2023}

}

Acknowledgements

The main part of this project work was funded by Johannes Kepler University Linz, Linz Institute of Technology (LIT), the State of Upper Austria, and the Federal Ministry of Education, Science and Research under grant number LIT-2019-7-SEE-117, awarded to Martina Mara and Marc Streit. The “AI Forest” installation and tablet game were made possible by funding through the LIT Special Call for the Ars Electronica Festival 2021, awarded to Martina Mara. We gratefully acknowledge additional funding by the Austrian Science Fund under grant number FWF DFH 23–N, by the State of Upper Austria through the Human-Interpretable Machine Learning project, and by the Johannes Kepler Open Access Publishing Fund.

This project would not have been possible without the support of many highly motivated people. We want to thank Nives Meloni, Birke van Maartens, Leonie Haasler, Gabriel Vitel, Kenji Tanaka, Stefan Eibelwimmer, Christopher Lindinger, Moritz Heckmann, Alfio Ventura, all supporting student assistants and colleagues from the Robopsychology Lab at JKU, Roman Peherstorfer and the JKU press team, Otto Stoik and the members of the Mycological Working Group (MYAG) at the Biology Center Linz, and all involved members of the German Mycological Society (DGfM).